I noticed an interesting thing today while attempting to make some parameters on a PowerShell script required.

As I'm only just getting the hang of PowerShell, you'll have to bear with my script-kiddie tendencies. Here's the background:

I'm creating a script that will take the following parameters (can you tell I'm still working with TFS?):

- [string] WorkItemType
- [string] ProjectsToUse
- [switch] ExportWitd

I wanted to make the WorkItemType parameter required, and searching around the internet led me to the following code:

[string] $WorkItemType = $(throw 'workItemType is required')

Which does indeed "work" if you're happy with exceptions being thrown if you don't set it:

workItemType is required

At C:\Scripts\UpdateTFSWorItems.ps1:17 char:37

+ [string] $WorkItemType = $(throw <<<< "workItemType is required"),

+ CategoryInfo : OperationStopped: (workItemType is required:String) [], RuntimeException

+ FullyQualifiedErrorId : workItemType is required

It also doesn't help if more than one parameter is required (you only get the first error), and get-help doesn't know that the parameter is required either:

PS C:\Scripts> get-help .\UpdateTFSWorItems.ps1

UpdateTFSWorItems.ps1 [[-WorkItemType] <String>] [[-ProjectsToUse] <String>] [-ExportWitd]

As you can see, get-help thinks that WorkItemType is optional (the square brackets around the name and the type).

The actual answer came in the form of the parameter attribute:

[parameter(Mandatory = $true)]

[string] $WorkItemType

Now when I run the script without the WorkItemType parameter I get prompted to supply the required parameters as a read prompt, rather than nasty exceptions:

cmdlet UpdateTFSWorItems.ps1 at command pipeline position 1

Supply values for the following parameters:

WorkItemType:
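For context, here is a minimal sketch of how the three parameters might sit together in one param block. It's wrapped in a hypothetical `Update-WorkItems` function purely so it can be pasted into a console; in the .ps1 itself the same `param(...)` block goes at the top of the script (after any comment-based help), with no function around it. The `'New'` default for ProjectsToUse is taken from the help text further down.

```powershell
function Update-WorkItems {
    param(
        # Mandatory: PowerShell prompts for it if it's missing,
        # and get-help no longer marks it as optional.
        [Parameter(Mandatory = $true)]
        [string] $WorkItemType,

        # Optional, defaulting to "New" (the other option is "Legacy").
        [string] $ProjectsToUse = 'New',

        # A switch is $false unless it's present on the command line.
        [switch] $ExportWitd
    )

    "Type=$WorkItemType Projects=$ProjectsToUse Export=$($ExportWitd.IsPresent)"
}

# The mandatory value is supplied here, so there's no prompt.
Update-WorkItems -WorkItemType 'Bug' -ExportWitd
```

Any other parameter given the same attribute is prompted for in the same pass, rather than stopping at the first one as the `throw` default does.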

However, this also has some fairly major consequences:

It converts your script into an "Advanced Function", as some of the documentation on TechNet for this states:

[T]o be recognized as an advanced function (rather than a simple function), a function must have either the CmdletBinding attribute or the Parameter attribute, or both.

There doesn't appear to be a way to apply one or other of these attributes and not be recognised as an advanced function.

Because your script is now "advanced", get-help is a bit different:

PS C:\Scripts> get-help .\UpdateTFSWorItems.ps1

UpdateTFSWorItems.ps1 [-WorkItemType] <String> [[-ProjectsToUse] <String>] [-ExportWitd]

[-Verbose] [-Debug] [-ErrorAction <ActionPreference>] [-WarningAction <ActionPreference>]

[-ErrorVariable <String>] [-WarningVariable <String>] [-OutVariable <String>]

[-OutBuffer <Int32>]

Where did all those extra parameters come from? Your function now supports all the "Common Parameters"; this is stated more clearly if you add some help documentation to your script:

<#

.Parameter WorkItemType

Required. The name of the work item type you want to update in TFS.

.Parameter ProjectsToUse

New or Legacy, defaults to New.

#>

Calling get-help now results in the following syntax line being auto-generated:

SYNTAX

C:\Scripts\UpdateTFSWorItems.ps1 [-WorkItemType] <String> [[-ProjectsToUse] <String>]

[-ExportWitd] [<CommonParameters>]

In general, though, I think this is a bonus, as it allows me to call the Write-Verbose and Write-Debug cmdlets to get additional output and breakpoints.

If you're going to go to the effort of adding parameter documentation, you might as well also supply the HelpMessage parameter of the Parameter attribute:

[parameter(Mandatory = $true, HelpMessage = "The name of the work item type you want to update in TFS.")]

[string] $WorkItemType

Which then allows the user to get some help as they are prompted:

cmdlet UpdateTFSWorItems.ps1 at command pipeline position 1

Supply values for the following parameters:

(Type !? for Help.)

WorkItemType: !?

The name of the work item type you want to update in TFS

WorkItemType:

Gotcha with Write-Verbose

Write-host appears to take an array of objects and write a string representation of them out to the screen, which can be (ab)used in "interesting" ways:

write-host "Exporting WIT Definition from TFS Project" $selectedProjects[$i]

Results in output like:

Exporting WIT Definition from TFS Project Generic

Write-Verbose (and indeed Write-Debug), however, explicitly takes only a single string, and calling it in the same way results in a nasty exception to that effect, so make sure you concatenate the parts first:

write-verbose ("Project folder " + $selectedProjects[$i] + " already exists")

Which is slightly tedious.
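Two less tedious alternatives that also hand Write-Verbose a single string are sketched below: the `-f` format operator (which needs wrapping in parentheses, otherwise `-f` is taken as a parameter name) and a `$(...)` subexpression inside a double-quoted string (without it, `"$selectedProjects[$i]"` expands the whole array and then appends the index in square brackets). The function name and the sample project name are made up for the example; `[CmdletBinding()]` is there so that `-Verbose` works.

```powershell
function Show-ProjectFolderMessages {
    [CmdletBinding()]
    param(
        [string[]] $SelectedProjects = @('Generic')
    )

    for ($i = 0; $i -lt $SelectedProjects.Count; $i++) {
        # 1. The -f format operator; the parentheses make the whole
        #    expression a single argument to Write-Verbose.
        Write-Verbose ('Project folder {0} already exists' -f $SelectedProjects[$i])

        # 2. A subexpression, so the indexing happens inside the string.
        Write-Verbose "Project folder $($SelectedProjects[$i]) already exists"
    }
}

# -Verbose turns the messages on for this call only.
Show-ProjectFolderMessages -Verbose
```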

Filed under: TFS

Just when you thought it was safe to enter source control

Apparently, we weren't finished with the upgrade from Team Foundation Server 2005. We've upgraded, and people are apparently checking stuff out, working on it, and checking it back in successfully (after a couple of false starts*), so it's all good… Or is it?

The full series

Part 5: In Which Errors Are Noted

You may recall that in Part 3 I had to fiddle around with a few report definitions and import a new work item type into the process template. What I forgot to note down in the blog was that I also had to tweak the names of a couple of fields in one of the work items to get it to import successfully. I probably forgot to write about it because it's something I often had to do when mucking around with them.

My long-suffering Network Manager pointed out to me that the Application Log on the TFS server Newyork was being filled with the following error:

TF53010: The following error has occurred in a Team Foundation component or extension:

Date (UTC): 03/05/2011 17:57:56

Machine: NEWYORK

Application Domain: TfsJobAgent.exe

Assembly: Microsoft.TeamFoundation.Framework.Server, Version=10.0.0.0, Culture=neutral, PublicKeyToken=b03f5f7f11d50a3a; v2.0.50727

Service Host:

Process Details:

Process Name: TFSJobAgent

Process Id: 4008

Thread Id: 1524

Detailed Message:

Adapter: Microsoft.TeamFoundation.WorkItemTracking.WorkItemTrackingWarehouseAdapter

Team Project Collection: Legacy

TF221161: There are conflicting definitions of the following work item fields in the warehouse: System.ExternalLinkCount<->System.ExternalLinkCount (DefaultCollection); System.HyperLinkCount<->System.HyperLinkCount (DefaultCollection); System.AttachedFileCount<->System.AttachedFileCount (DefaultCollection). These conflicting definitions come from different project collections. Work items from project collection Legacy will not be updated until the conflict is resolved. You can resolve the conflict by making the definitions of the field the same in all project collections, or marking the field as non-reportable in the project collection Legacy. For more information, see the Microsoft Web site (http://go.microsoft.com/fwlink/?LinkId=160807).

The link at the end of the error gives you pretty much all the information you need to resolve the issue, but it bears repeating so that I don't forget it again:

Start by doing a quick compare and contrast of the two systems using the Visual Studio 2010 command prompt and the Work Item Template Admin tool (witadmin):

First, call ListFields on both collections to compare their settings:

witadmin listfields /collection:http://newyork:8080/tfs/Legacy /n:System.ExternalLinkCount

This resulted in:

Field: System.ExternalLinkCount

Name: ExternalLinkCount

Type: Integer

Use: System

Field Indexed: False

Reportable As: measure:sum

Calling ListFields against the original collection (DefaultCollection) resulted in:

Field: System.ExternalLinkCount

Name: External Link Count

Type: Integer

Use: System Field

Indexed: False

Reportable As: measure:sum

Note the spaces in the Name field: this is what's causing the problem. Both fields have the same reference name but different display names, and these need to be in sync to stop the errors.

Seeing as the fields were currently being reported on in the original project collection, it makes sense to use the version with spaces:

witadmin changefield /collection:http://newyork:8080/tfs/Legacy /n:System.ExternalLinkCount /name:"External Link Count"

Note the quotes around the new name value. Finally, validate that the field was correctly updated by re-issuing the ListFields command. I won't encourage you to add the optional /noprompt parameter, but it's there if you don't want to confirm each step.

Repeat this for each field listed in the error, and you should now be good to go.
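Repeating the command per field lends itself to a small loop. This is a sketch only: the collection URL and "External Link Count" come from the post, but "Hyperlink Count" and "Attached File Count" are my assumption of the spaced display names, so check them with `witadmin listfields` against DefaultCollection before running it. If witadmin isn't on the path (for example, outside a Visual Studio 2010 command prompt), it just prints the commands it would run.

```powershell
$collection = 'http://newyork:8080/tfs/Legacy'

# Reference name -> display name used in DefaultCollection.
# Only the first display name is confirmed above; the others are ASSUMED.
$fields = [ordered]@{
    'System.ExternalLinkCount' = 'External Link Count'
    'System.HyperLinkCount'    = 'Hyperlink Count'
    'System.AttachedFileCount' = 'Attached File Count'
}

function Get-ChangeFieldArguments {
    param([string] $Collection, [System.Collections.IDictionary] $Fields)

    foreach ($refName in $Fields.Keys) {
        # The leading comma emits each argument list as one object,
        # so the caller gets one array per field rather than a flat list.
        ,@('changefield', "/collection:$Collection", "/n:$refName", "/name:$($Fields[$refName])")
    }
}

foreach ($witArgs in (Get-ChangeFieldArguments -Collection $collection -Fields $fields)) {
    if (Get-Command witadmin -ErrorAction SilentlyContinue) {
        # Append '/noprompt' to $witArgs to skip the confirmation.
        & witadmin @witArgs
    }
    else {
        # Dry run: show the command instead of running it.
        'witadmin ' + ($witArgs -join ' ')
    }
}
```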

(*) The false starts were fixed by:

- Installing the relevant Forward Compatibility Update (2005 and 2008).
- When connecting to the server, supplying the entire path to the project collection in the "Name" field, rather than just the server name:

  http://newyork:8080/tfs/Legacy


Yay for short working weeks! I started writing this post last week, and then Easter came along and this week's even shorter as we've been given an extra bank holiday so we can all go and watch the Royal Wedding, which will be fun for all concerned I'm sure - hope the weather holds, but it's not looking great at the moment…

Last time we tried Killing off TFS 2005

We'd started the dry run of our TFS Integration process on the test server TFS10. This had started migrating some of the work items, but also had issues with a missing Work Item Type. I then left it running, and went on holiday…


State of the nation

I got back to discover that the server had been restarted at some point during the last week (thanks for the mandatory Windows Updates on the servers, chaps), but after some digging I discovered that the process had crashed with an "Out Of Memory" exception. I suppose trying to migrate part of an 11GB TFS instance to another database on the same server was a bit much to ask. Luckily, TFS10 is a virtual machine, so after a quick shut down/re-configure/restart we were back in operation with 6GB of RAM, and I tried again.


I imported the missing Work Item Type into the target project, and then all 2,930 Work Items imported without any problems.

However, I had a number of issues with the version control migration, many of which, in the end, I've not been able to resolve:

1. The workspace names are a combination of the server name, the current instance GUID and your session names, and the result must be less than 64 characters or errors will occur. The workarounds for this are:
   - Change the WorkSpaceRoot application setting in the MigrationToolServers.config file (in the TFS Integration Tools install directory) to be as short as possible.
   - Ensure that the "Name" fields of the Left and Right Version Control Session Sources are short but meaningful.
2. Related to 1: we've got some historical check-ins to folders that resulted in a total path length of over 260 characters. For various reasons TFS can't cope with this, and other than carrying out the steps in 1, there's nothing you can do about it.
3. Regardless of what the tool states, you can edit the Version Control filter pairs after the configuration has been saved to the database if you're happy to edit the XML directly; just remember to leave off the trailing "/". However, this will result in the Discovery and Analysis phases running again.
4. We'd already performed some sort of merge from different projects into one of the projects we're trying to merge, and this caused an issue because the TFS Integration Tool couldn't find a workspace mapping for these old, no-longer-existing projects. I was hopeful that this could be resolved by adding a new filter pair to map them in manually, but I've not been able to confirm this completely.
5. Various Null Reference Exceptions, stating that the Path could not be null, which caused the migration to stop.
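Before starting a long migration it may be worth finding the over-long paths up front. Below is a rough sketch with a hypothetical helper. It assumes the local path is simply the workspace root plus the server path with `$/` stripped and `/` turned into `\`, which is a simplification of how the Integration Tools actually lay out their workspaces, and it uses 259 as the limit (Windows' MAX_PATH of 260 includes the terminating null). You'd need to build the list of server paths yourself, for example from `tf dir ... /recursive` output.

```powershell
function Find-LongLocalPaths {
    param(
        [string]   $WorkspaceRoot,    # hypothetical local root for the workspace
        [string[]] $ServerPaths,      # e.g. '$/Project/Folder/File.cs'
        [int]      $MaxLength = 259   # MAX_PATH (260) includes the terminating null
    )

    foreach ($serverPath in $ServerPaths) {
        # '$/Project/Folder/File.cs' -> 'Project\Folder\File.cs'
        $relative  = $serverPath.TrimStart([char[]]'$/') -replace '/', '\'
        $localPath = $WorkspaceRoot.TrimEnd([char]'\') + '\' + $relative

        if ($localPath.Length -gt $MaxLength) {
            [pscustomobject]@{ ServerPath = $serverPath; Length = $localPath.Length }
        }
    }
}

# Which of these would be too long under a root of C:\ws?
Find-LongLocalPaths -WorkspaceRoot 'C:\ws' -ServerPaths @('$/Project/short.cs', ('$/Project/' + ('a' * 300) + '.cs'))
```

Running it with a few candidate roots gives a feel for how much a shorter WorkSpaceRoot actually buys you.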

So, in the end, I've had to pull the plug on this experiment and live with a separate Team Project Collection for the legacy code base. I'll keep telling myself that "It's probably ok, we're not going to be working on them side-by-side" and things like that, but in the end I'm a little sad that it didn't work.

If you've got a fairly simple or small history (or you're happy to drop a good portion of it), and you know that your file paths are short enough, then I think the Team Foundation Server Integration Platform is a great tool. But, as they warn you throughout their documentation, it really should only be used if you absolutely have to and you can't live with an upgrade. (A note on that: for some reason, on the live TFS 2010 box I actually had to restart TfsJobAgent to get the upgrade to complete, as the new Project Collection wasn't created until I restarted it.)


Previously on Killing off Team Foundation Server 2005

As you no doubt recall, I've now got the hang of simple migration projects with the Team Foundation Server Integration Tools. I'm therefore trying out the migration of the actual data (from a backup; remember the /confirmed switch we added to the Upgrade command in Part 1?).


Part 3: Dry run with upgraded project

The first step was to download the Process Templates that had been used in the MissouriImported Project Collection (in Visual Studio, go to Team | Team Project Collection Settings | Process Template Manager), and upload them into the DefaultCollection Project Collection.

I then tried to create a new Project using the Process Template I'd imported, but it failed on the "Populate Reports" task. As is increasingly the case, the logs are your friend. The cause was that the Reporting Services data source names have changed since TFS 2005: they were originally called TfsOlapReportDS and TfsReportDS, but in TFS 2010 they are Tfs2010OlapReportDS and Tfs2010ReportDS respectively.

This was fairly simple to fix: navigate to the Reports folder in the downloaded process template, open the ReportsTasks.xml file and do a Find and Replace of "/TfsOlapReportDS" and "/TfsReportDS" with the 2010 versions. Note the leading slash: you want to replace the "name" attribute while leaving the "dsname" attribute as is, as this will allow TFS to find the incorrect version and update it with the correct one. Save the file and re-upload the process template. To be safe, I then restarted Visual Studio.
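If you have several templates to fix, the find-and-replace can be scripted. A minimal sketch, assuming each data source reference carries the name with a leading slash (e.g. `name="/TfsReportDS"`) while the other attribute holds the bare name; `$templatePath` is a placeholder for wherever your downloaded template lives, and the function name is hypothetical.

```powershell
function Update-ReportDataSourceNames {
    param([string] $Markup)

    # Match on name="/..." only: the leading slash means the attribute
    # holding the bare data source name is left as it is.
    # (-replace is case-insensitive, so TFSReportDS matches too.)
    $Markup = $Markup -replace 'name="/TfsOlapReportDS"', 'name="/Tfs2010OlapReportDS"'
    $Markup -replace 'name="/TfsReportDS"', 'name="/Tfs2010ReportDS"'
}

# Placeholder path (assumed file name): point this at the downloaded template's file.
$templatePath = '.\Reports\ReportsTasks.xml'
if (Test-Path $templatePath) {
    $content = Get-Content -Path $templatePath -Raw
    Set-Content -Path $templatePath -Value (Update-ReportDataSourceNames $content) -Encoding UTF8
}
```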

With the updated Process Template in place, I successfully created a couple of new projects using it, and decided to try and migrate the larger of the two projects.

It all started so well. I set up the TFS Integration configurations as per my learnings from the Hands On Lab (HOL), saved it, and started the migration. The tool soon found 1,699 Work Item Change groups (work items, changes/updates and links - there are about 600 work items in that project, we weren't really using it properly), analysed them, and started migrating them - that's when the trouble started.

It turns out that someone added a new Work Item Type to the project without updating the Process Template, so there are just over 200 work items of type "Defect" that have nowhere to go. This is odd, as the (admittedly out of date) HOL had implied that "the default is to move all work item types" and that this is only disabled if you define a mapping. I've not defined any mappings, but clearly it's not going to create the type on its own. It's all the more tedious as the preferred fix is to create a mapping rule and resolve the issue that way, but at the moment it appears that I have to do this one conflict at a time, and there are currently over 400 and counting, so it could take some time. That said, the whole purpose of this test is to iron out these sorts of issues, so for next time I'm going to import the missing Work Item Type.

At the time of writing (18:13), the process has been running for about 1.75 hours. It's migrated 1,236 of the 1,699 work item "change groups" (after discovering and analysing them), which is about what I'd expected, and it has discovered over 12,000 Version Control "change groups". The changeset IDs on that server go up to 53,000, but they aren't all in the projects I'm migrating; Sidekicks seems to think it's around 33,000.

There now follows a short intermission.


Following on from where we left off in Part 1.

Recap

We've upgraded a copy of the Team Foundation Server 2005 databases to TFS 2010, and connected to it using Visual Studio 2010 to confirm that the source code, history and work items had indeed been migrated successfully.

I've also installed a copy of VS2003, and the latest version of the MSSCCI Provider to confirm that I'm happy with it in that scenario, as well as confirmed that users that aren't project administrators can also see the history, etc (we'd had issues with this on the VSS - TFS upgrade).


Part 2: Merging Projects

Now comes the more complicated part: Merging the projects from the upgraded Team Project Collection (MissouriImported). This is made more complicated by the fact that I didn't come up with a sensible name for the new instance of a project in the original TFS 2010 instance (Newyork) - so I now have two projects with the same name in different collections that I'd like to have in the same collection, but with different names.

After I'd installed the TFS Integration Tools (note to self: when it asks for the database server and defaults to a SQL Express instance, if you're connecting to the default SQL Server instance on a box you don't want to add the instance name, so just TFS10 and not TFS10\MSSQLSERVER), I then tried going through the Getting Started with a TFS to TFS Migration Hands On Lab (HOL) that comes as part of the guidance. I strongly recommend that you do as well to familiarise yourself with the process; however, it's not all plain sailing.


Running through the Hands On Lab

Basic Scenario

This worked out quite nicely, but then it's designed to minimise and work around all potential conflicts. As I'm not using the "Visual Studio ALM Rangers HOL base image" mentioned in step 4 of the HOL, I obviously had to set up some projects, which I did in much the same way the HOL described. Note that the HOL is a little out of date compared to the tools:

- When you configure the Version Control and Work Item Tracking sessions, the screenshots imply that the name of each session is of the form "Project Name (ServerName)", but the tool appears to use "ServerName (Session Type)", so instead of getting "TP-A (tfs10)" I got "tfs10 (VC)" for both the Left and Right sources. You can (and probably should, especially if you're planning on doing a two-way sync) change these names to something more useful.
- When the HOL asks you to Save and Close, you should Save to Database.

As I'm planning on running a "One-way migration", I skipped steps 7.3, 7.5 & 7.4 [sic].

Advanced Scenario

This is where the issues really started. As I'm going to be importing into a project with the same team process, I wasn't too fussed about changing the WIT types, however it's good to read through it, as this is the easiest way to move projects to a different process template - something I may be doing at a later point for some of our old projects.

I basically hit an issue with the Version Control (VC) migration. The HOL assumes that the VC conflict you're going to see with the BuildProcessTemplates folders will result in the error "A content conflict has been detected…", with an option to use the "Always take the source system's changes" resolution. However, this wasn't available to me, as the error I received was "A namespace conflict has been detected between the source and the target system…", which meant I had to provide "merged changesets for the source and target system (requires a manual merge)". This is rather tedious, as the files themselves are identical apart from their check-in timestamps, and I couldn't work out what it really wanted: providing some rather arbitrary changeset IDs resulted in no source code at all being migrated.

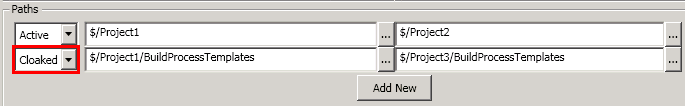

In the end I worked around it by effectively ignoring the BuildProcessTemplates folder:

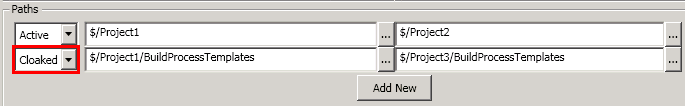

In the Version Control Session definition, I added a new Paths rule and set it to "Cloaked" for the BuildProcessTemplates paths:

With this rule in place, the duplicate BuildProcessTemplates folder and XAML files were ignored by the migration, and it completed smoothly.

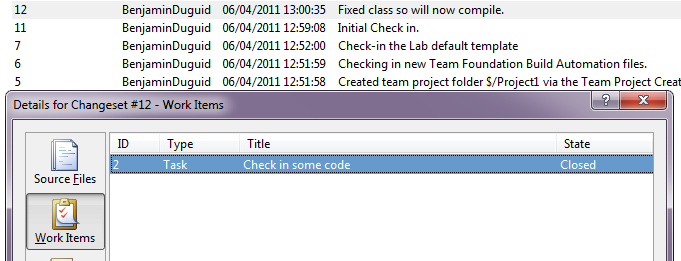

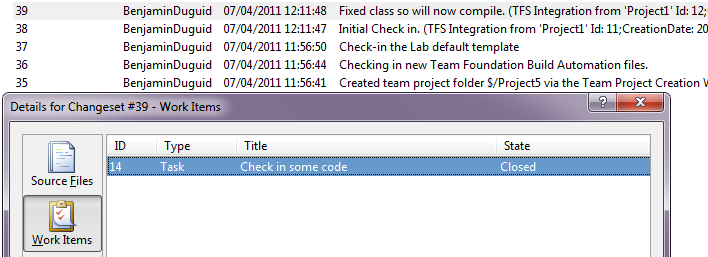

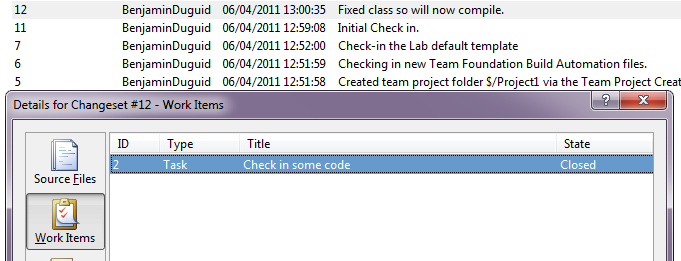

As an example, here's the work items list for a check-in on Project1:

As you can hopefully see, this check-in has a comment, and is associated with a closed task work item (2).

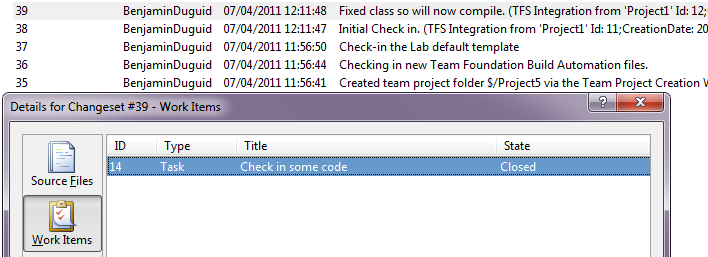

Here's the equivalent for the migrated project:

This is associated with the migrated task (14), and the check-in comment has been carried across, along with details of where it came from and the original check-in details (changeset ID and timestamp).

I'm therefore fairly optimistic now for the dry run of the main project migration, even though it's got over 3GB of "stuff" under source control (I use the word "Source" advisedly there, there's a lot of other things in there, from binaries to SDO packages and beyond).

I'll let you know how it goes.


I'm in the process of upgrading an instance of TFS2005 to 2010, with the added complication of wanting to combine the projects into an existing TFS 2010 Project Collection.


What am I doing?

As I've had to hunt around on the internet for various steps in the process, I thought I'd try and pull it all together in one place, with the caveat of "it worked for me on my servers": your mileage may vary (considerably) and I take no responsibility for any loss of data, source code, etc. that you may experience (however, I do have to take full responsibility for any loss of data, source code, etc. that my team experiences).

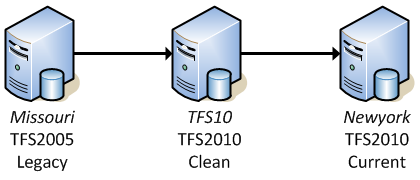

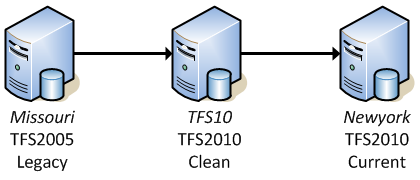

To set the scene then, we have the following servers involved in this process, because I want to minimise the downtime that the teams experience while I'm doing this, and the upgrade can't be undone:

- Missouri - Legacy codebase running on TFS 2005 (upgraded from VSS), using a local instance of SQL Server 2005.
- Newyork - Current codebase running on TFS 2010 (upgraded from 2008), using a remote SQL Server 2008 instance.
- TFS10 - Clean install of TFS 2010 using a local instance of SQL Server 2008 for me to play around on.

I found the following resources quite useful as well:

Part 1: Migration Upgrade from TFS 2005 to TFS 2010

These are the steps I took to migrate our TFS 2005 instance (Missouri) to the clean TFS 2010 instance (TFS10):

1. Copy the latest backup files of the following databases from Missouri to TFS10:
   - TFSActivityLogging
   - TFSBuild
   - TFSIntegration
   - TFSVersionControl
   - TFSWarehouse
   - TFSWorkItemTracking
   - TFSWorkItemTrackingAttachments
2. Stop the TFS services using the command line tool found in the Tools folder of your TFS installation:

   TFSServiceControl quiesce

3. Create new databases on TFS10 with the same names as the backups, and restore the backups into these. Note to self: when restoring a database over an existing one, make sure you change the file path on the options pane to the correct destination file path.
4. Because my friendly SysAdmin had kindly installed TFS for me, opening the TFS Administration Console didn't give me the option of starting the TFS Configuration Tool that's mentioned in the upgrade steps. However, you can run the upgrade process from the command line (again, in the TFS Tools folder):

   tfsconfig import /sqlInstance:tfs10 /collectionName:MissouriImported /confirmed

   Obviously, your SQL Instance and Collection Name will be different, but you get the point.

   This will take a while to run: anecdotal reports are "between 30 and 180 hours", depending on the size of your repository and the amount of processing resources available. Mine took around 15 minutes on a 10GB version control database and a 76MB work item tracking database.
5. If all goes well, the command window should report that it's completed "Collection creation step 208 of 208", and that "The Upgrade operation on the ApplicationTier feature has completed. There were 0 errors and 0 warnings.". I can't really advise on what to do if either of those doesn't happen for you, other than to say "Take a look in the log file", which has quite a lot of detail in it.
6. Restart the TFS services:

   TFSServiceControl unquiesce

7. Connect an instance of Visual Studio to the new Project Collection and verify that things look ok. You might also want to try connecting VS2003 (yes, some of the code in there is indeed ASP.NET 1.1, which pretty much requires VS2003; some of it is older still, and is classic ASP/VBScript).

More to follow shortly.


I thought I'd start a new series focusing on what I'm currently working on - Tridion from SDL.

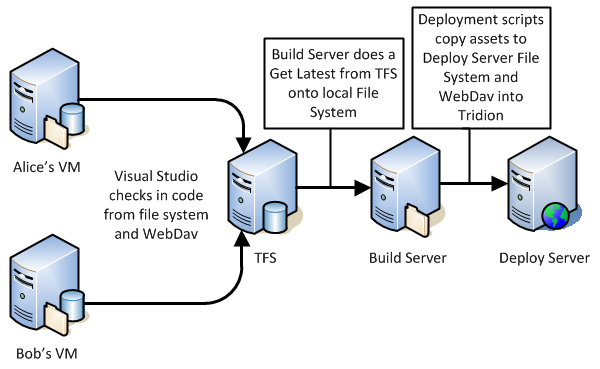

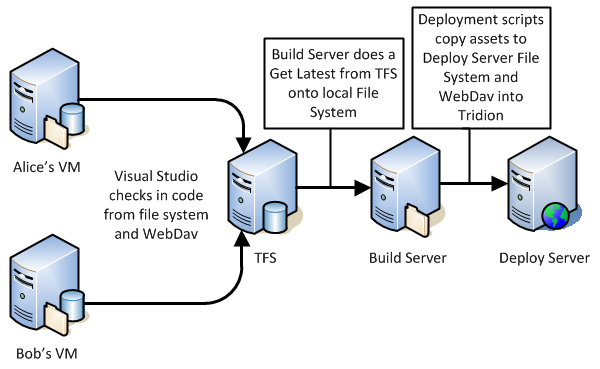

The key thing I'm trying to solve at the moment is the deployment of work from one environment to another - initially one developer machine to another, then onto a shared build/deployment setup, then on through QA, User Acceptance Testing and onto live.

We've already done similar things with SharePoint, which gives us a baseline of what we're trying to achieve, so it shouldn't be completely impossible.

Seeing as we're working on our C# code, CSS, JavaScript, etc. in Visual Studio, tracking our tasks and bugs in TFS, and have a build process built around this that takes these assets, compiles them, and generates some scripts to be run on the various environments to deploy them, we felt we'd like to try and use this for Tridion elements as well.

The first thing we tried, apparently the "recommended" way, was to use Content Porter. We wanted to prepare a "base" image that we could easily roll out to developers to start working on. We spent the better part of two days trying to break down the solution into small enough packages that we could reliably import them without it throwing an error and rolling back the last hour's attempt.

We felt that not only was this frustrating, but it also wouldn't scale nicely: it relies on the developers remembering exactly what they've changed and manually exporting those items with CP. I'm sure it's possible, but it does leave a large margin of error.

What we came up with was the following "ideal plan", following a standard "Good, Better, Best" approach:

Good, Better, Best: Tridion to TFS

| | Good (for deployment to QA) | Better | Best (for deployment to live) |
|---|---|---|---|
| Manual Steps | Existing content: Checkout in TFS, edit where appropriate. New content: Add to TFS via WebDav. Check in pending changes (including associating work items). | Check in pending changes (including associating work items). | Check in pending changes (including associating work items). |
| Build Solution For Automation | Automate the creation of TFS workspaces so processes can "Get Latest" straight from TFS into Tridion via WebDav. | Hook into Tridion's Pre-Save event to check out the TFS version, or add to TFS. Warn the user of potential issues. | Automate the creation of publications in Tridion. |

We've started this, and hit our first stumbling block: some content appears to be coming back from WebDav as UTF8, while some is coming back as UTF16, which seems to be confusing TFS when doing a check-in, throwing up an error such as:

Visual Studio

z:\300 Design\Building Blocks\System\btn_conference.gif.gif: TF203083: The checksum value of the file that you uploaded does not match the provided value.

Which generally went away if I tried to check the file in a second time.

This seems to be more of a problem with TFS than Tridion or WebDav - an old post from Buck Hodges "How TFS Version Control determines a file's encoding" seems to hint at difficulties with encoding, especially around:

Unfortunately, TFS does not support changing the encoding of a pending add. If you need to do that, you will have to undo the pending add, and then re-add the file using the command line and specify the /type option.

We think we've found a solution: using a different mechanism to map the WebDav folder to a network drive seems to result in TFS seeing consistent encodings, so this feels like a good place to stop and report back on progress later.
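To see which files are coming back in which encoding, a quick look at the byte order mark (BOM) at the start of each file can help. A minimal sketch with a hypothetical helper: it only recognises the UTF-8 and UTF-16 BOMs, so a UTF-16 file saved without a BOM reports "No BOM", and it's a much cruder test than the detection TFS itself does (see Buck Hodges' post). `Z:\` is a placeholder for wherever the WebDav folder is mapped.

```powershell
function Get-BomEncoding {
    param([byte[]] $Bytes)

    # -and short-circuits, so the length checks guard the indexing.
    if ($Bytes.Length -ge 3 -and $Bytes[0] -eq 0xEF -and $Bytes[1] -eq 0xBB -and $Bytes[2] -eq 0xBF) { return 'UTF-8' }
    if ($Bytes.Length -ge 2 -and $Bytes[0] -eq 0xFF -and $Bytes[1] -eq 0xFE) { return 'UTF-16 LE' }
    if ($Bytes.Length -ge 2 -and $Bytes[0] -eq 0xFE -and $Bytes[1] -eq 0xFF) { return 'UTF-16 BE' }
    return 'No BOM'
}

# Placeholder for the drive the WebDav folder is mapped to.
$webDavDrive = 'Z:\'
if (Test-Path $webDavDrive) {
    Get-ChildItem -Path $webDavDrive -Recurse -File |
        ForEach-Object {
            # Reads the whole file; fine for small building blocks.
            $bytes = [System.IO.File]::ReadAllBytes($_.FullName)
            [pscustomobject]@{ Path = $_.FullName; Encoding = (Get-BomEncoding -Bytes $bytes) }
        }
}
```

Comparing the output across the two drive-mapping mechanisms would show whether the BOMs are what's changing.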


More TFS fun and games:

Team Foundation Server 2010 will happily install and configure Reporting Server for you to power all your reporting needs, but I've come up against the following issue when attempting to modify the existing reports, or create new ones:

When you click on "Report Builder" from the Reporting Services web interface, it opens up the ClickOnce application "Report Builder 1.0".

This application cannot open the reports that come with TFS process templates, and that are installed on Reporting Services - the error it reports is:

Cannot open this item

This report cannot be opened in Report Builder.

And if you check the details you see:

System.IO.StreamReader: The Report element was not found.

Which is odd, seeing as if I open the .rdl file in a text editor, I can clearly see the root Report element in there.

If you get the latest (at the time of writing 3.0) version of Report Builder, it will not connect to Reporting Services - it keeps claiming that either the services aren't configured, or I don't have permissions.

However, if you install version 2 of Report Builder, almost all your woes go away, and you can open and edit reports to your heart's content.

The only outstanding woe I had was that when I opened a report from the server, edited it and saved it, the report appeared to break, claiming that the TfsOlapReportDS was invalid. To resolve that, I had to switch to the Properties tab of the report in the browser, select DataSources and re-map the report to the correct shared data source. Once I'd done that, all future saves worked fine.


We're in the process of moving to Team Foundation Server 2010, and as part of the process, we're updating the process template we're using, creating branded versions of the default documents, etc. Also, with new projects kicking off we're able to really sort out which process template we're using.

However, while we're ironing these changes out, we obviously need to actually do some work - so to that end we'd tweaked a template, created our project using that, and in the background we can work on the template for other projects.

This has left us with two things:

- A number of projects in our test Project Collection with portals in the wrong place in SharePoint.
- A number of projects using out-of-date portals.

At the moment, we've not made any changes to the contents in SharePoint (beyond access rights); we're mostly just using the dashboards and reports, so the cost of recreating these templates isn't that high. However, I was having a lot of difficulty working out how to move existing portals, or how to rebuild a portal after creating the project: the New Team Project wizard includes the option "Do not configure a SharePoint site at this time", with no real guidance on how to create one later.

As this is currently looking at WSS, and these aren't publishing sites, I can't move them easily in SharePoint. We tried exporting them and importing them into the new location, but that also didn't work: there were errors about a template missing from the _forms folder (I guess the default template for the Excel reports document library).

I tried creating a new site in SharePoint using the TFS templates supplied, but that didn't pick up my changes to the template, nor set up/create the shared documents, or the project dashboard. I also tried creating a dummy project with a portal in the right place, disconnecting the portal in TFS, then pointing the right project at the portal and finally deleting the dummy project, which worked, but was going to be a lot of work for all the other projects.

Finally, I took a look at the actions included in the tfpt command line tool from the Team Foundation Server Power Tools and noticed:

addprojectportal Add or move portal for an existing team project

Pass this the path to TFS, the project and process template you want to move, and optionally the details for the SharePoint instance you want to use and away it goes, creating a new Project Portal for you with all the modifications you've made.

I would point out the following issue I've had with this tool, though it may be due to my usage of it: when I pointed it at a project that already had a portal, I expected the tool to move the portal for me. However (probably because the project was built using a different Process Template), I now have two portals for this project, the old one and the new one. Not a big deal: I can easily delete the old portal, and TFS seems to be happily pointing at the new one.

Running it without the /verbose option seemed to fail - and the logs weren't very helpful. Running the same command with /verbose resulted in a successful run. I may have just been unlucky.

Morals of this post:

- Always check the power tools first.
- MS have a habit of not finishing things, and releasing the bits you actually need as power tools.
- Mario Rodriguez rocks.


So, there are lots of exciting new changes in VS 2k8 SP1, two of which have already made me very happy, and one of which also helps:

The two that really please me are in the Source Control Explorer window:

- Local Path is now blue and underlined: that's right, it's now an explorer link that will open up Windows Explorer with your mapped path. No more "Select local path, Ctrl+C, Windows+R, Ctrl+V, Enter" for me, just "click".
- Add to source control: this dialog now lists, by default, the files you can add to source control from the currently mapped local path, with existing files hidden. Hooray! That's one of my main issues with TFS fixed.

And the other change? They've updated the Visual Studio Icon to include the 9 in a box, so in a list of running programs (start bar, program lists, Alt+Tab windows) it's easy to tell at a glance which is the VS 2k8 version and which is VS 2k3.

